A New Frontier in Medicine: AI-Designed Treatments Tackle Neglected Diseases

By Dr. Marco V. Benavides Sánchez.

Introduction:

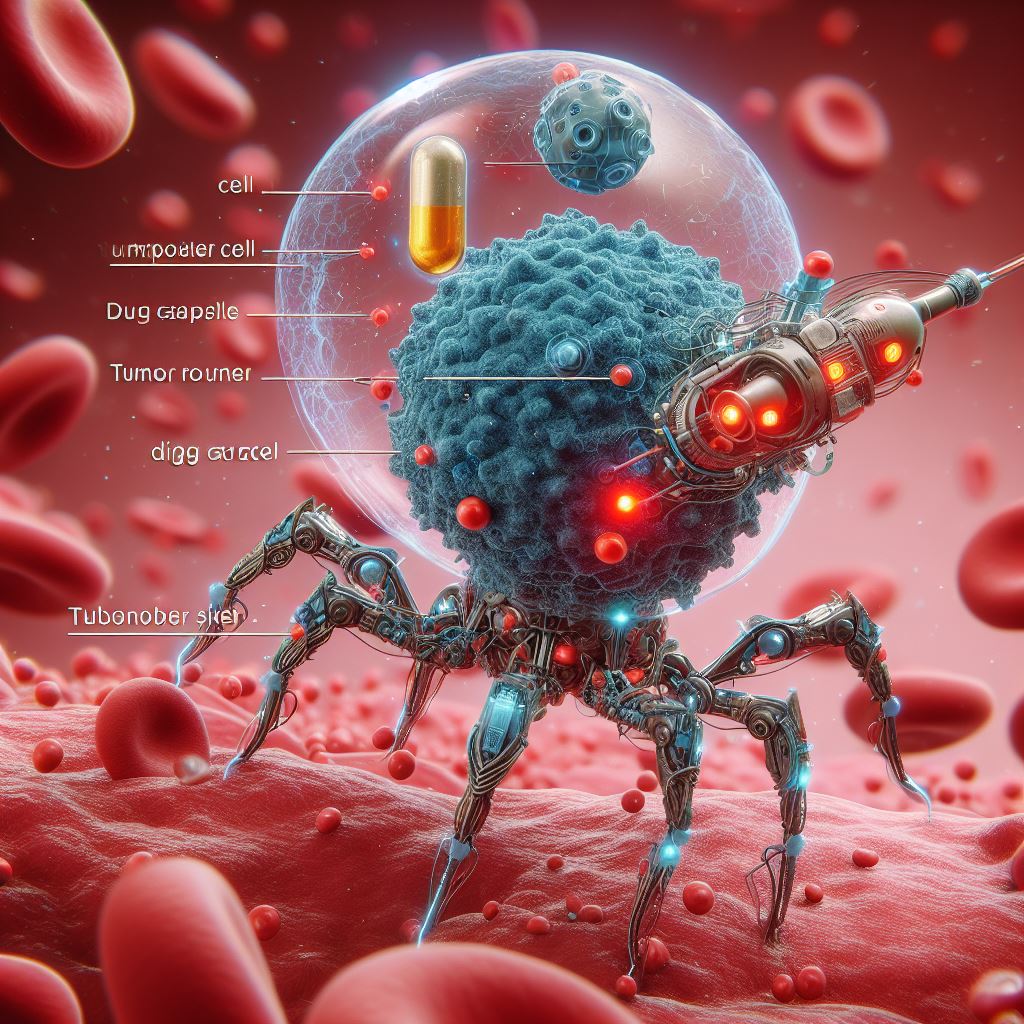

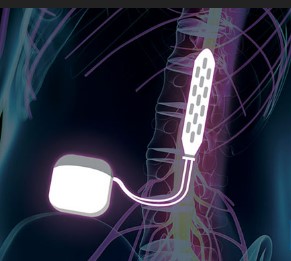

The recent advancements in artificial intelligence (AI) have transcended traditional applications, marking a pivotal shift in the medical landscape. An experimental treatment for snakebite envenoming, a condition often overlooked by pharmaceutical giants due to its predominant prevalence in poorer, rural regions, has been created entirely by AI. This milestone not only heralds a new era in drug development but also illustrates the potential of AI to address neglected health challenges. This article delves into the transformative role of AI in medicine, focusing on its latest achievement and the broader implications for global health.

The Breakthrough:

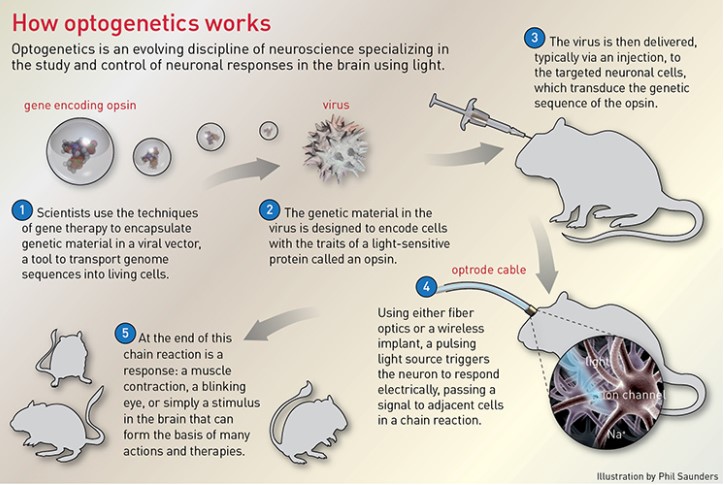

In a landmark development, the laboratory of Nobel Prize laureate David Baker at the University of Washington has successfully utilized AI to design an experimental treatment for snakebite envenoming. By harnessing the capabilities of AI technologies like RFdiffusion and ProteinMPNN, researchers have created novel proteins that can effectively neutralize the lethal toxins found in cobra venom. This innovative approach not only promises to revolutionize the treatment of venomous bites but also underscores the capacity of AI to drive significant medical breakthroughs.

The Process:

The design process relied on two AI programs working in sequence. RFdiffusion, a generative model, proposes new protein backbone structures shaped to bind a chosen target, and ProteinMPNN then designs amino acid sequences expected to fold into those backbones. Together, these tools allowed rapid computational modeling of candidate antitoxins, significantly reducing the time and resources typically required for drug discovery.

Global Impact:

Snakebite envenoming affects as many as 2.7 million people annually, leading to roughly 100,000 deaths and about three times as many amputations and other permanent disabilities. The development of an effective, AI-generated treatment could dramatically change the outlook for millions, particularly in underserved regions of Africa, Asia, and South America. By focusing on a disease largely ignored by the pharmaceutical industry, AI demonstrates its potential to democratize healthcare and address global health disparities.

Further Developments by Google DeepMind:

Adding to the excitement in the AI and medical communities, Demis Hassabis, CEO of Google DeepMind, announced that AI-designed drugs are expected to enter clinical trials by the end of 2025. These developments are spearheaded by Isomorphic Labs, a venture initiated by Alphabet, aiming to leverage AI in the creation of new pharmaceuticals. This move signals a significant commitment from one of the tech world’s behemoths to foster innovation in drug development through AI.

Regulatory and Ethical Considerations:

The advent of AI-designed drugs raises a range of regulatory and ethical questions. As AI begins to play a central role in developing treatments, it becomes crucial to establish robust frameworks for evaluating and overseeing these innovations. How regulators respond will play a decisive role in shaping the path forward for AI in medicine, ensuring that such innovations benefit society while addressing potential risks and ethical concerns.

The Future of AI in Medicine:

The successful application of AI in developing treatments for neglected diseases could set a precedent for addressing other medical challenges. As AI technologies continue to evolve, their integration into various stages of drug development and healthcare delivery is expected to accelerate, offering promising solutions for diagnostics, personalized medicine, and epidemic management. Moreover, the collaboration between AI experts and biomedical researchers is likely to enhance our understanding of diseases at a molecular level, paving the way for more targeted and effective therapies.

Conclusion:

The creation of an AI-designed treatment for snakebite envenoming is a testament to the transformative potential of artificial intelligence in medicine. This breakthrough not only offers hope for tackling neglected diseases but also exemplifies how AI can be harnessed to foster innovation in healthcare. As we stand on the brink of this new frontier, the continued integration of AI into medical research and treatment holds the promise of reshaping healthcare landscapes around the world, making it more equitable, efficient, and effective. The ongoing developments and the anticipated clinical trials of AI-designed drugs mark the beginning of a new chapter in medicine, one that could redefine possibilities in healthcare delivery and treatment efficacy for years to come.

Call to Action:

As we witness these exciting developments, it is imperative for the medical community, regulatory bodies, and ethical committees to work in unison to guide the responsible integration of AI in healthcare. Embracing these technologies while upholding stringent ethical standards will be key to unlocking the full potential of AI in medicine and ensuring that its benefits are universally accessible.

For further reading:

(3) Google DeepMind CEO says 2025's the year we start popping pills AI helped invent

#ArtificialIntelligence #Medicine #Surgery #Medmultilingua

Climate Change Refugees: The Los Angeles Wildfires as a Global Wake-Up Call

By Dr. Marco V. Benavides Sánchez.

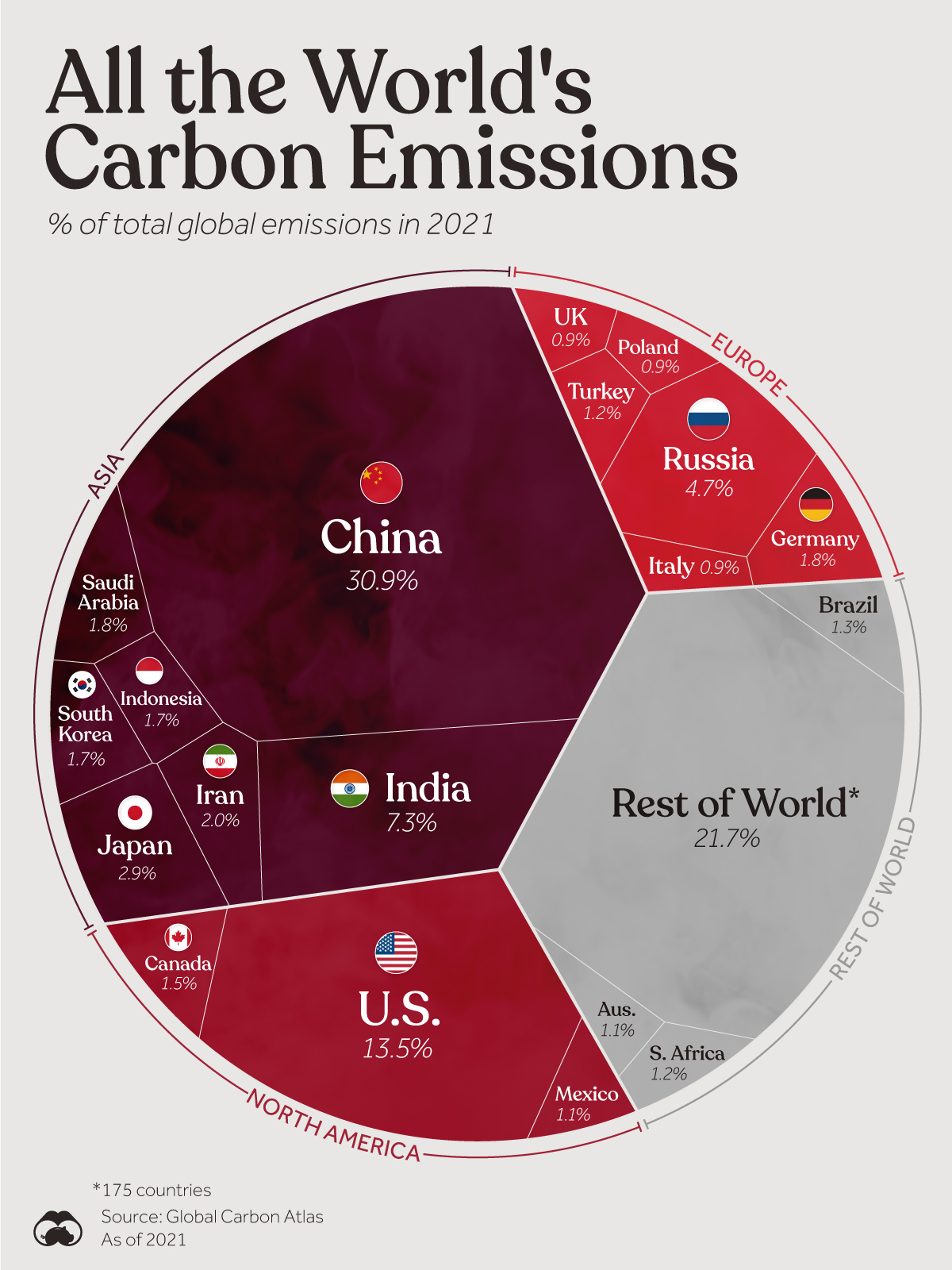

In recent days, the world has been confronted with a stark reality: approximately 150,000 people have been evacuated due to the devastating wildfires in Los Angeles. Images of destroyed homes and displaced individuals are both tragic and overwhelming, but one statement from a victim stands out. Describing their experience, this individual referred to themselves as a “first world climate change refugee.” The phrase makes clear that this is no longer a problem only for poor countries. It captures not only the immediate suffering of those losing everything but also prompts us to reflect on the global consequences of climate change. It reminds us that we are in the midst of a crisis that can no longer be ignored.

The Face of Climate Change

The wildfires in California, particularly in Los Angeles, are not isolated incidents. While wildfire season is a recurring event in the region, the past few years have seen increasingly extreme conditions. The combination of high temperatures, prolonged drought, strong winds, and dried-out vegetation creates the perfect conditions for wildfires to spread rapidly. However, what is most concerning is that these phenomena are being exacerbated by climate change. Rising global temperatures have altered weather patterns, intensifying heat waves, reducing rainfall, and lengthening periods of dryness. What was once considered a seasonal risk has now evolved into a constant and persistent threat that affects not only ecosystems but also human communities.

The term “climate change refugee” is increasingly being used by people who live in areas affected by natural disasters. But it is more than just a phrase—it is a tangible reality for thousands of individuals who are being forced to abandon their homes, lose their livelihoods, and face an uncertain future due to the escalation of extreme weather events. These new displaced persons do not have a country to flee to because their plight is not confined to a local or national crisis; it is a global challenge. The truth is that many of the communities forced to migrate due to climate events are left without adequate protection or support, lacking the legal frameworks that typically govern refugees of war or political persecution.

A Wake-Up Call

The devastating impact of the wildfires in Los Angeles is not just an event that should move us emotionally, but a call to action for humanity. The number of displaced persons, the damage to biodiversity, and the disruption of daily life underscore what could become the future for many regions if we do not act urgently. What is happening in California could happen anywhere in the world. From the Amazon basin to North Africa, entire regions are seeing their ecosystems and ways of life altered by global warming.

Throughout history, migration crises have been driven by war, political persecution, or economic disparity. Today, climate change has been added to that list, transforming into one of the primary causes of forced displacement. However, unlike other types of refugees, those displaced by climate impacts do not always receive the same level of international attention, nor do they have clear legal protections. In many cases, they are stripped of their fundamental rights and left to face the chaos without the support they desperately need. The term “refugee” was never meant to describe the plight of those fleeing environmental catastrophes, and the current legal frameworks for refugees do not adequately address the needs of climate migrants.

A New Kind of Refugee: The Climate Refugee

The “climate refugee” is not a distant or abstract concept. They are real people—just like us—who have dreams, families, and aspirations to live in a habitable world. The climate crisis is not just an environmental issue; it is a question of social, economic, and environmental justice. The wildfires in Los Angeles are merely one manifestation of a global problem that affects us all. Every action counts, and every decision we make today will influence future generations.

The Global Impact of Climate Change and Displacement

The displacement caused by these events has a profound impact on both the affected communities and the global community at large. In Los Angeles, entire neighborhoods have been forced to flee, leaving behind everything they’ve built. The consequences are not just physical but psychological, as people are uprooted from their homes and livelihoods. The destruction of thousands of homes represents not only a loss of property but also the shattering of lives. The human cost of climate change is becoming increasingly evident.

The challenges of displacement and the humanitarian crisis resulting from climate change cannot be ignored. Governments around the world must take responsibility for their role in contributing to the crisis and must work collaboratively to develop solutions. This includes providing support to displaced communities, addressing the root causes of climate change, and creating legal frameworks to protect climate refugees. International organizations, non-governmental organizations, and local governments must work together to ensure that those displaced by climate events are given the support they need to rebuild their lives.

A Call to Action for the Future

As we face an increasingly uncertain future, it is clear that the time for action is now. The wildfires in Los Angeles are just one of many signs that the effects of climate change are already upon us. From devastating floods in Europe to deadly wildfires in Australia, the signs are everywhere. If we fail to take decisive action, the consequences will be far-reaching and irreversible.

The Los Angeles wildfires are a tragic reminder that climate change is no longer a distant concern: it is here and it is already displacing people, whether they are wealthy movie stars or poor migrants displaced by war. The global community must rise to the challenge and work together to protect the most vulnerable, ensure a fair and equitable future for all, and tackle the root causes of climate change head on.

We are at a crossroads. The decisions we make today will determine the world we leave for future generations. If we act decisively and work together, we can begin to turn the tide on climate change, protect those at risk, and ensure that the planet remains habitable for generations to come.

References

(1) Deadly Los Angeles wildfires: 100,000 under evacuation orders as crews report progress

(2) Los Angeles wildfires devour thousands of homes, death toll rises to 10

(3) L.A. wildfire evacuation warnings

(4) Fires devastating Los Angeles grow more slowly as fierce winds die down

(5) Los Angeles fires: More than 10,000 homes and businesses destroyed, at least 10 dead


Jimmy Carter: A Life of Service, Peace, and Humanity

By Dr. Marco V. Benavides Sánchez.

James Earl Carter Jr., known worldwide as Jimmy Carter, was not only the 39th president of the United States but also an unwavering advocate for human rights and a symbol of integrity in politics and public life. From his childhood in Plains, Georgia, to his passing at the age of 100 on December 29, 2024, Carter’s life was marked by an unshakable commitment to service and social justice. This article is my personal tribute to the man, the statesman, and the example he set.

Early Life and Education

Jimmy Carter was born on October 1, 1924, in Plains, Georgia, into a farming family. He grew up during a time of racial segregation and economic hardship, experiences that profoundly shaped his worldview. From a young age, Carter showed a strong interest in learning, excelling in his studies, and developing a deep sense of responsibility.

He attended the United States Naval Academy, graduating in 1946. That same year he married Rosalynn Smith, also from Plains, who remained his wife and lifelong companion for 77 years. After leaving the Navy, Carter returned to Plains to take over the family peanut business, demonstrating a commitment to his local community that would define his later career.

Political Career: The Path to the Presidency

Jimmy Carter began his political career in the 1960s, first serving as a state senator in Georgia and later as governor. During his term as governor (1971–1975), he implemented significant reforms, including initiatives to improve education and eliminate segregationist policies in the state. His progressive stance in a conservative state elevated him to national prominence.

In 1976, Carter launched his presidential campaign, presenting himself as an “outsider” at a time when public trust in government was deeply shaken following the Watergate scandal. His message of honesty and transparency resonated with voters, leading to his narrow victory over President Gerald Ford in a historic election.

The Presidency: Challenges and Achievements

Jimmy Carter’s presidency (1977–1981) was marked by complex challenges and significant accomplishments. During his tenure, he faced economic issues such as inflation and unemployment, as well as international crises that defined his administration.

Camp David Accords

One of Carter’s greatest achievements was his role as a mediator in the Camp David Accords, signed in 1978 between Egypt and Israel. These historic negotiations led to a peace treaty between the two nations, signed in 1979, ending decades of conflict and setting a precedent for future Middle East peace efforts.

Human Rights as a Cornerstone

Carter took an innovative approach to foreign policy by placing human rights at the center of his decisions. His administration openly criticized authoritarian regimes, promoted democracy, and reduced support for allied dictatorships. While these policies sometimes sparked controversy, they also laid the groundwork for a more ethical approach to international relations.

The Iran Hostage Crisis

The Iran hostage crisis, in which 52 American diplomats were held captive for 444 days, posed a significant challenge to Carter’s presidency. Despite his diplomatic efforts, the hostages were not released until minutes after Ronald Reagan assumed the presidency in 1981. This episode, coupled with a weakened economy, contributed to Carter’s defeat in the 1980 election.

A Life After the Presidency

Carter’s electoral defeat did not mark the end of his career; instead, it signaled the beginning of an even more impactful phase of his life. In 1982, alongside Rosalynn, he founded the Carter Center, an organization dedicated to promoting human rights, eradicating diseases, and resolving conflicts.

The Carter Center: Transforming Lives

The Carter Center’s work ranged from monitoring elections in over 100 countries to combating neglected tropical diseases such as guinea worm disease and trachoma. These initiatives improved the quality of life for millions of people, particularly in the world’s most vulnerable communities.

Nobel Peace Prize

In 2002, Jimmy Carter was awarded the Nobel Peace Prize in recognition of his decades-long humanitarian work and tireless promotion of peace. This honor solidified his reputation as one of the most influential and respected former presidents in history.

Health Challenges and Resilience

In his later years, Carter faced several health challenges, including a diagnosis of metastatic melanoma in 2015. Nonetheless, he demonstrated remarkable strength and optimism, defying medical expectations after receiving innovative treatments. In February 2023, he entered hospice care at his home in Plains, where he spent the remainder of his life surrounded by his family.

An Unparalleled Legacy

Jimmy Carter leaves behind a legacy that transcends his presidency. His life exemplifies the values of humility, service, and dedication to just causes. Some key aspects of his legacy include:

Champion of Human Rights: Carter raised the standard for human rights in international politics, demonstrating that ethics can align with diplomacy.

Humanitarian Leader: Through the Carter Center, he transformed millions of lives, proving that former presidents can continue to serve humanity meaningfully.

Model of Integrity: In an era of growing distrust toward political leaders, Carter stood as a beacon of honesty and decency.

Global Unifier: His commitment to peace, both as a president and a global citizen, served as a bridge between nations and communities.

Reactions and Tributes

News of his passing prompted a wave of tributes from world leaders, international organizations, and ordinary citizens. Presidents and dignitaries highlighted his work as an example of what a leader can achieve when guided by compassion and a commitment to the greater good.

In Plains, his small hometown, flags flew at half-staff as residents shared personal stories of how Carter had touched their lives, whether directly or indirectly. Rosalynn Carter, who passed away on November 19, 2023, was also remembered as his steadfast partner in both life and work.

Lessons from an Exemplary Life

Jimmy Carter’s life teaches us that power lies not only in the office one holds but in how it is used to benefit others. In an age where cynicism and polarization dominate the political landscape, Carter reminds us of the importance of honesty, hard work, and dedication to public service.

Carter did not merely lead; he inspired. He did not only speak of peace; he lived and actively promoted it. His legacy is not measured solely in history books but in the hearts of those whose lives he touched with his humanity and vision.

Jimmy Carter was more than a former president of the United States; he was a moral leader, a humanitarian, and a tireless advocate for human rights—not only for his country but for many around the world who admired his decency and kindness. His life, marked by extraordinary dedication to public service, continues to inspire future generations. As we bid farewell to this giant of history, we honor his memory and commit to carrying forward his mission of building a more just and peaceful world.

References

(1) Former US President Jimmy Carter dies aged 100

(2) Public officials mourn passing of former President Jimmy Carter

(3) Former President Jimmy Carter dies at 100

(4) Live updates: Former President Jimmy Carter dies at 100


Understanding the Limits of Artificial Intelligence in Complex Biological Systems

Dr. Marco V. Benavides Sánchez.

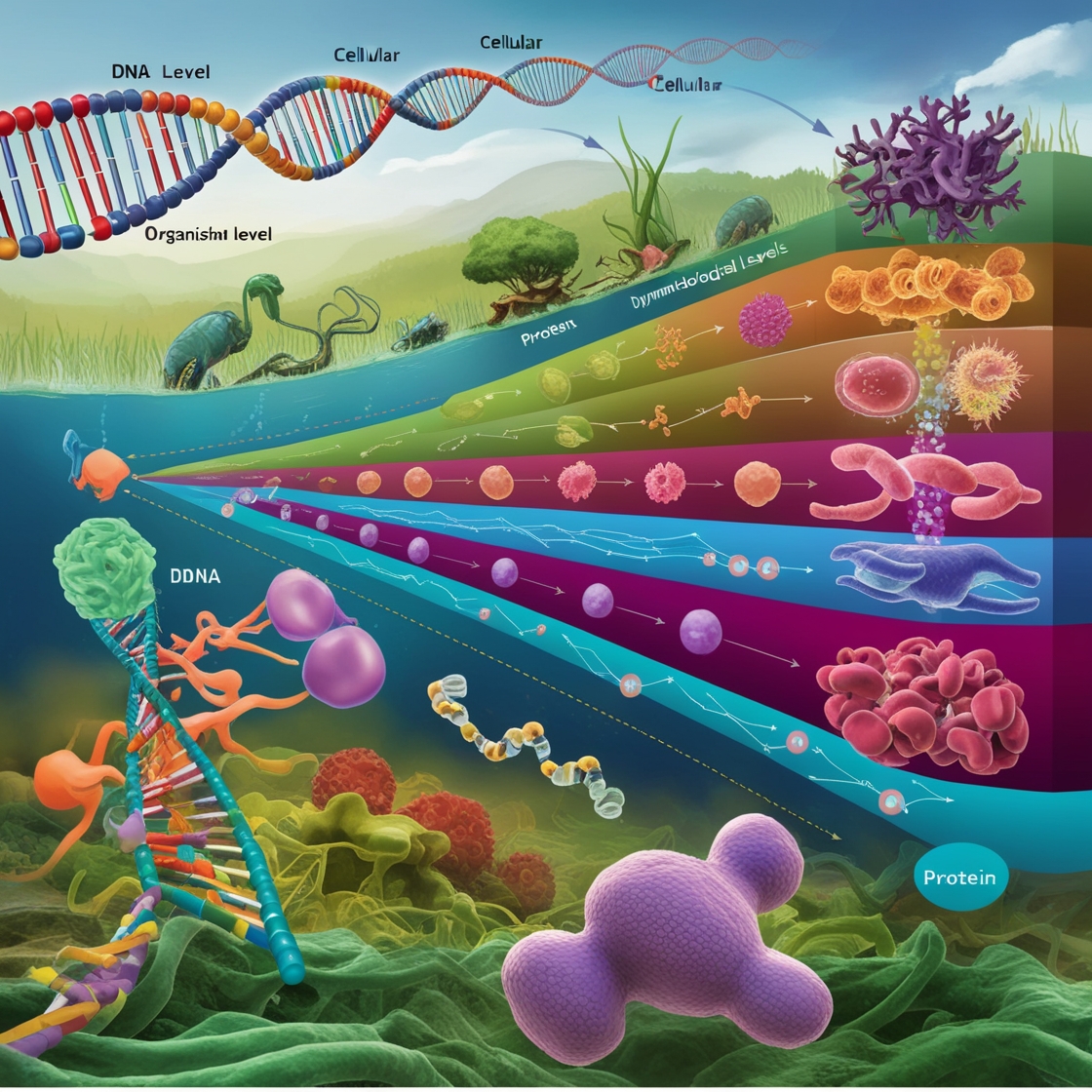

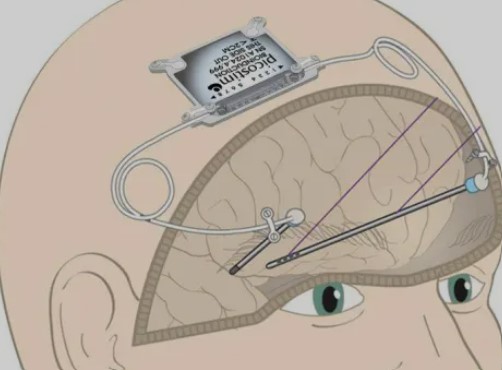

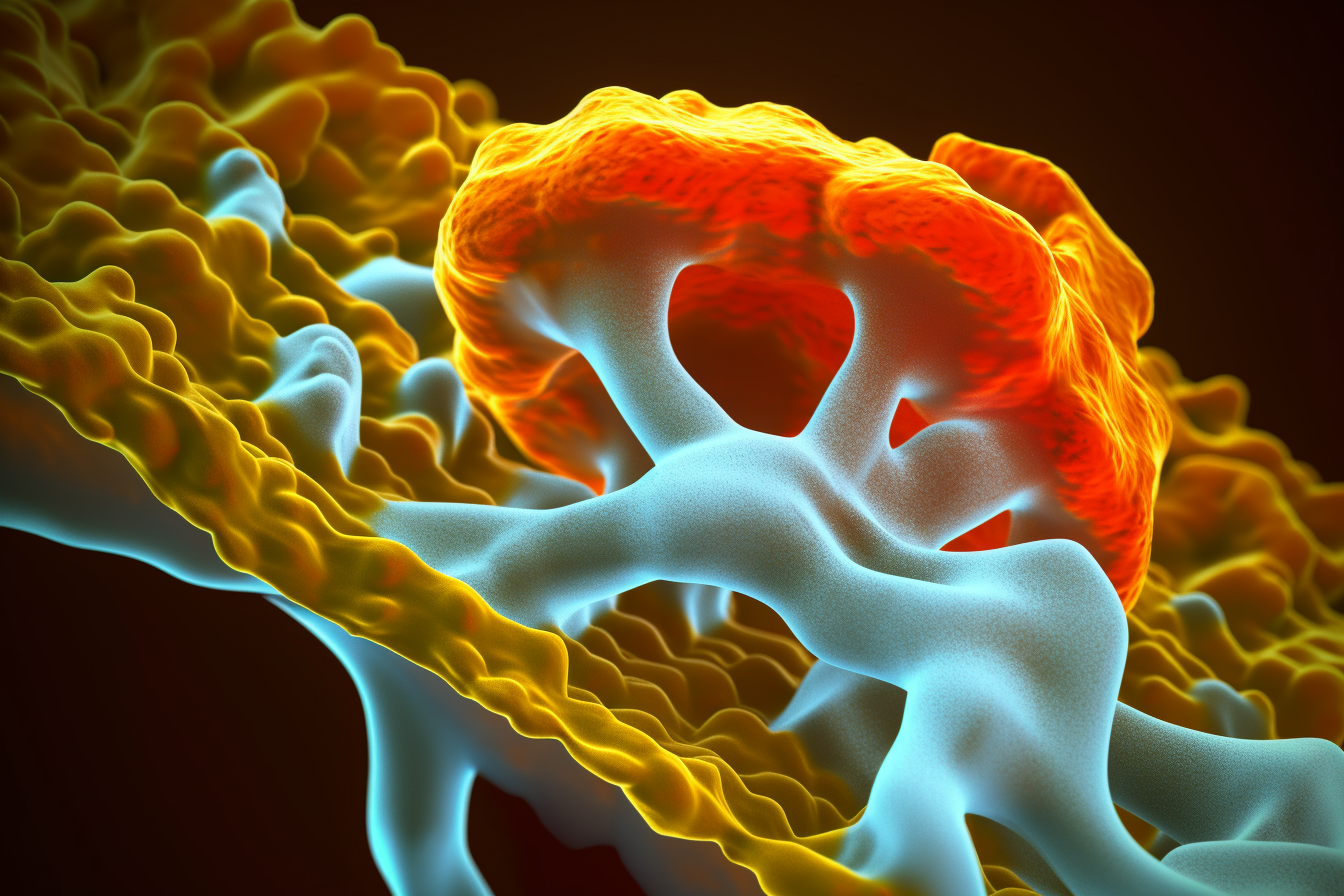

In recent years, artificial intelligence (AI) has demonstrated extraordinary progress in fields as diverse as medicine, biology, and engineering. Tools such as AlphaFold, a system developed by DeepMind, a subsidiary of Alphabet, that predicts the three-dimensional structure of proteins from their amino acid sequence, have revolutionized structural biology and marked a turning point in biomedical research.

However, despite these achievements, AI faces significant difficulties when it comes to understanding and modeling complex biological systems. These systems, characterized by intricate interactions, dynamic behaviors, and organization at multiple scales, pose substantial barriers to current AI capabilities.

The Challenge of Complex Interactions

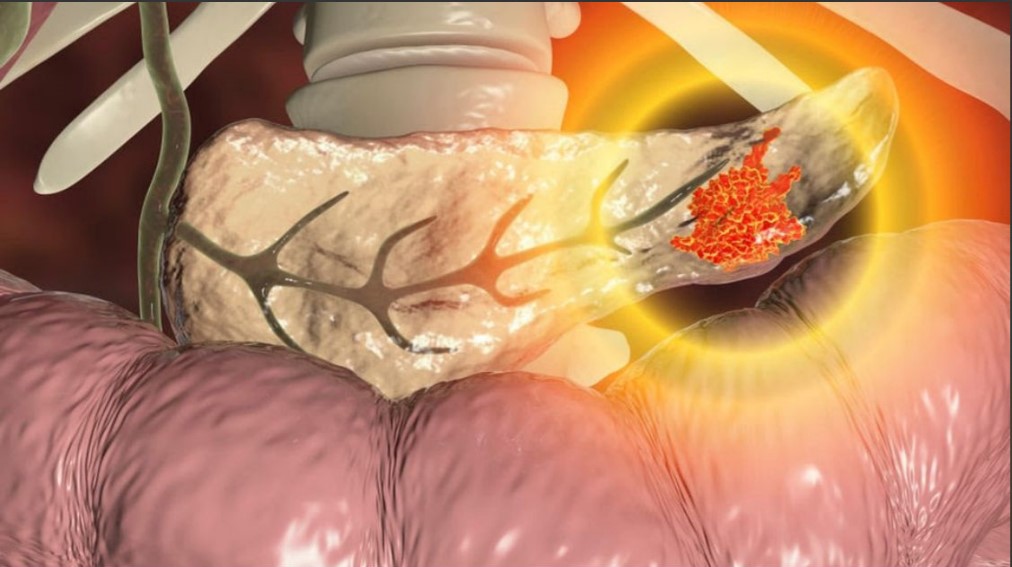

Biological systems are inherently complex due to the numerous interactions between molecules, cells, tissues, and organs. These interactions are often non-linear and context-dependent, which greatly complicates the task of predicting outcomes. For example, the same signaling molecule can have different effects depending on the cellular environment or the time at which it acts. This contrasts with the traditional approach of AI models, which tend to look for static patterns and make predictions based on correlations rather than underlying causal mechanisms.

An emblematic case is cancer biology. Although many genetic mutations related to the disease have been identified, whether these mutations lead to tumor formation depends on the cellular context and the network of interactions in which they participate. This complexity frequently escapes current algorithms, which need large amounts of data and considerable computational capacity to model even a fraction of these interactions.
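The gap between correlation and context-dependent causation can be made concrete with a toy calculation. The sketch below is purely illustrative: the `response` rule, the two contexts "A" and "B", and the signal values are invented assumptions, not biological data. It shows a signal that activates in one context and inhibits in the other, so that the correlation over the pooled data is zero even though the effect within each context is perfectly regular.

```python
from statistics import mean

def response(signal: float, context: str) -> float:
    """Hypothetical rule: the signal activates in context A and inhibits in context B."""
    return signal if context == "A" else -signal

def pearson(xs, ys):
    """Pearson correlation coefficient computed from first principles."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def correlation_in(rows):
    """Correlation between signal and response for a list of (signal, context, response) rows."""
    return pearson([s for s, _, _ in rows], [r for _, _, r in rows])

signals = [0.0, 1.0, 2.0, 3.0, 4.0]  # invented signal strengths
data = [(s, c, response(s, c)) for c in ("A", "B") for s in signals]

pooled = correlation_in(data)                              # context ignored
in_a = correlation_in([row for row in data if row[1] == "A"])
in_b = correlation_in([row for row in data if row[1] == "B"])

print("pooled:", pooled, "context A:", in_a, "context B:", in_b)
```

A model that only sees the pooled data would conclude the signal has no effect, which is the kind of error the paragraph above describes.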

The Dynamic Nature of Biological Processes

Unlike many other domains where AI is applied, biological systems are not static. Biological processes are dynamic and can change over time in response to various stimuli. For example, circadian cycles, changes in metabolic pathways during exercise, or the progression of a disease are temporal processes that require models capable of handling sequential data and adapting to new information.

More advanced AI models, such as those based on deep learning, have shown some success in handling time series data. However, these models face limitations when data is sparse, noisy, or incomplete, common characteristics in biology. In addition, the ability of these models to extrapolate beyond the training data remains a considerable challenge.
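The extrapolation problem can be illustrated with a deliberately simple stand-in. The sketch below does not use deep learning; it fits a straight line by least squares to a saturating curve, and both the curve (`saturating`) and the sampling range are invented assumptions. The point is only that a model can match its training range closely and still be badly wrong outside it.

```python
def saturating(x: float) -> float:
    """Hypothetical saturating response: rises quickly, then levels off below 1."""
    return x / (1.0 + x)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx

# Training data covers only the early, nearly linear part of the curve.
train_x = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
train_y = [saturating(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)

def predict(x: float) -> float:
    return slope * x + intercept

# Error inside the training range versus far outside it.
max_train_error = max(abs(predict(x) - y) for x, y in zip(train_x, train_y))
extrapolation_error = abs(predict(10.0) - saturating(10.0))

print("max error in training range:", round(max_train_error, 3))
print("error at x = 10:", round(extrapolation_error, 3))
```

Sparse or noisy measurements would make the fit inside the training range worse as well; the example leaves noise out so that the result is reproducible.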

Multi-Scale Integration: An Unsolved Problem

One of the greatest challenges in modern biology is integrating data that spans different scales, from the molecular level to the entire organism. For example, to understand how a mutation in a gene affects the behavior of an organism, it is necessary to link events that occur at very different spatial and temporal scales. This task is particularly challenging for AI, as it requires tools capable of synthesizing disparate information and offering a coherent interpretation.

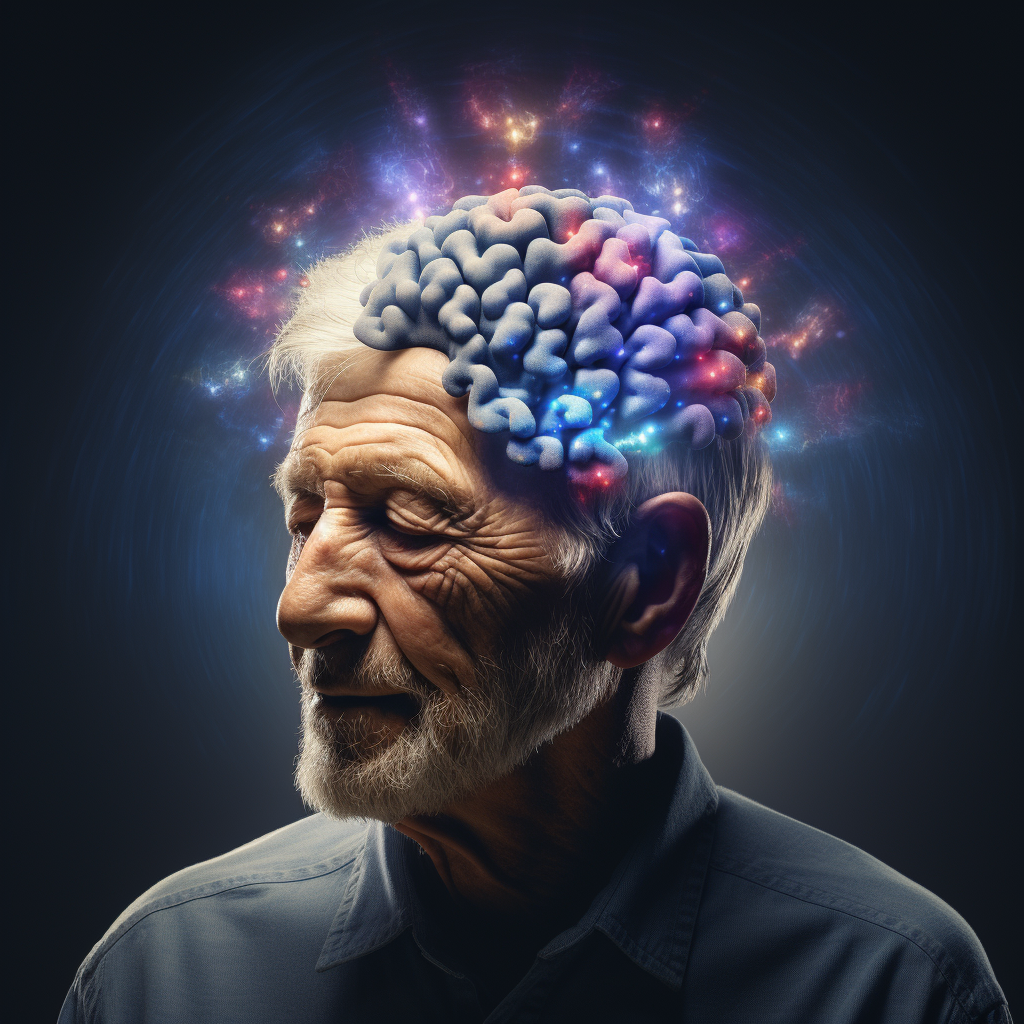

One area where this is particularly evident is research into complex diseases such as Alzheimer’s. Although numerous biomarkers and molecular pathways involved have been identified, it is not yet possible to build a model that captures all relevant levels of interaction. Current AI models often focus on a single scale, such as genomic or neuroimaging data, without properly integrating information coming from other sources.

Data Quality and Availability

Data quality and availability pose another significant hurdle. Although biological databases have grown exponentially, most datasets are noisy, incomplete, and heterogeneous. For example, clinical data often include missing information or recording errors, making them difficult to use in AI models.

Furthermore, there is an inherent bias in the available data. Many databases reflect a higher proportion of studies conducted in populations from certain countries or ethnic groups, limiting the global applicability of models trained on these data. AI also struggles to handle less structured types of data, such as medical images or data from wearable biomedical sensors.

Reflections and Future

To overcome these drawbacks, an interdisciplinary approach is needed that combines biological knowledge with advances in AI. In particular, the following areas show great potential:

1. Agentic AI: Agentic artificial intelligence refers to AI systems designed to act as independent agents, capable of making decisions and performing complex tasks with minimal human intervention. Unlike traditional chatbots, which require explicit instructions, agentic AI can anticipate needs and act proactively.

This approach seeks to develop AI systems that can formulate hypotheses, experiment autonomously, and adapt to new data. Agentic AI could be key to addressing the complexity and dynamics of biological systems.

2. Bioinspired AI: Bioinspired artificial intelligence refers to AI systems that are developed taking as a reference the principles and mechanisms of biological systems. This approach seeks to replicate the efficiency, adaptability, and robustness of natural processes in technological solutions.

Incorporating principles of biology into the design of algorithms could improve their adaptability and robustness. For example, neural networks that emulate the behavior of biological neural networks could offer new insights.

3. Collaboration between Sciences: Collaboration between biology and computational sciences has led to significant advances in various areas of science and medicine. This interdisciplinarity is known as bioinformatics or computational biology.

This collaboration is essential to develop more integrated models. The creation of international consortia that share standardized data could help overcome current limitations in data quality.

4. Hybrid Techniques: Combining artificial intelligence (AI) with traditional mathematical modeling approaches can significantly improve the ability to capture the complexity of biological systems. These hybrid techniques leverage the strengths of both approaches to provide a more complete and accurate understanding.
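As a rough illustration of the hybrid idea in the last item, the sketch below pairs a mechanistic model with a data-driven correction. Everything in it is an assumption made for the example: the exponential-decay model, the synthetic "measurements" with a slow drift, and the use of a simple least-squares line as a stand-in for a learned component.

```python
import math

def mechanistic(t: float, rate: float = 0.5) -> float:
    """Hypothetical first-principles model: exponential decay of a concentration."""
    return math.exp(-rate * t)

def observed(t: float) -> float:
    """Synthetic measurements: the same decay plus a slow drift the model omits."""
    return math.exp(-0.5 * t) + 0.05 * t

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx

times = [0.0, 1.0, 2.0, 3.0, 4.0]

# Data-driven part: learn what the mechanistic model leaves unexplained.
residuals = [observed(t) - mechanistic(t) for t in times]
slope, intercept = fit_line(times, residuals)

def hybrid(t: float) -> float:
    """Mechanistic prediction plus the fitted residual correction."""
    return mechanistic(t) + slope * t + intercept

mechanistic_error = max(abs(observed(t) - mechanistic(t)) for t in times)
hybrid_error = max(abs(observed(t) - hybrid(t)) for t in times)

print("mechanistic only:", round(mechanistic_error, 4))
print("hybrid:", round(hybrid_error, 4))
```

In this arrangement the mechanistic part carries the known biology, while the fitted part absorbs only the discrepancy, which typically needs less data than learning the whole behavior from scratch.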

Conclusion

AI has revolutionized our ability to tackle biological problems, but there is still a long way to go to fully understand complex biological systems. Recognizing current limitations is not only essential to advancing the field, but also to setting realistic expectations about what AI can and cannot achieve.

The promise of AI in biology is immense, but so is the need to develop new tools, methods, and interdisciplinary collaborations. The future of AI in biology depends not only on technical improvements, but also on our ability to integrate knowledge and build more coherent models that reflect the inherent complexity of life.

References

(1) Vert, J.-P. (2024). Unlocking the mysteries of complex biological systems with agentic AI. MIT Technology Review. Retrieved from MIT Technology Review.

(2) Soha Hassoun, Felicia Jefferson, Xinghua Shi, Brian Stucky, Jin Wang, Epaminondas Rosa, Artificial Intelligence for Biology, Integrative and Comparative Biology, Volume 61, Issue 6, December 2021, Pages 2267–2275, https://doi.org/10.1093/icb/icab188

(3) Dehghani, N., & Levin, M. (2024). Bio-inspired AI: Integrating biological complexity into artificial intelligence. arXiv. https://doi.org/10.48550/arXiv.2411.15243v1


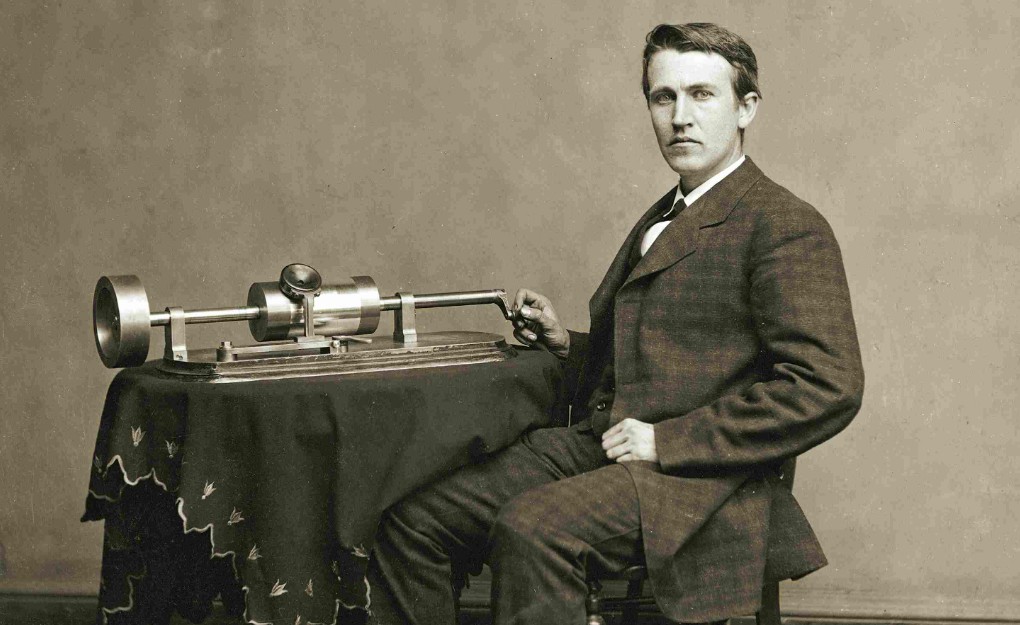

Thomas Edison's Phonograph: The Device that Gave Sound to Silence

Dr. Marco Vinicio Benavides Sánchez

The Accidental Genesis of Sound Recording

Thomas Edison, often more magician than inventor in the public eye, stumbled upon the idea of the phonograph during experiments with telegraphic messages. Picture Edison, whose days were spent in relentless pursuit of innovation, observing the rhythmic indentations made on paper tape by a telegraph machine. It was here, amidst the dots and dashes of long-distance communication, that Edison envisioned the wild possibility of sound waves leaving their own set of indelible marks. The leap from silent signals to sound recording wasn't obvious, and yet, for Edison, it seemed almost inevitable.

Inspired, Edison theorized that just as physical marks represented telegraph messages, so too could they embody the vibrations of sound. This moment of insight led to the birth of the phonograph. The device used a diaphragm with an embossing point that pressed against a rapidly moving cylinder wrapped in tin foil—a rudimentary setup by today’s standards, but at the time, it was nothing short of sorcery.

"Mary Had a Little Lamb": The First Echo

The phonograph’s first successful demonstration involved Edison reciting the nursery rhyme "Mary had a little lamb" into the machine. When the machine regurgitated his words back to him, it was not just a nursery rhyme that echoed in the room, but the resounding possibility of a new world. Historical accounts vary, with some marking this momentous test as occurring on August 12, 1877. Regardless of the exact date, the impact was timeless.

Imagine the scene: a group of hardened engineers, grown men who had their doubts, huddling around this mechanical ensemble as Edison’s voice, slightly tinny but undeniably real, spilled into the air. It must have seemed like a trick—a voice, unattached to a body, floating through the room! The initial amusement quickly gave way to awe as the implications of this invention began to dawn on them.

The Phonograph’s Mechanics and Marvel

The phonograph itself was a masterpiece of simplicity. It consisted of two main components—a recording mechanism and a playback mechanism, both using diaphragm-and-needle assemblies. Sound waves would cause the recording diaphragm to vibrate, creating indentations on the foil-covered cylinder. To play back the sound, the needle would trace these grooves, vibrating the playback diaphragm and recreating the sound waves in the air.
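The record-and-playback cycle described above can be mimicked in a few lines. This is only a toy model under stated assumptions: the real phonograph was an analog device, whereas the sketch stores the groove as a list of discrete depth levels, and the function names, the 16 depth levels, and the test tone are all invented for illustration.

```python
import math

DEPTH_LEVELS = 16  # assumed number of distinguishable indentation depths

def record(wave, steps: int) -> list:
    """Sample the sound wave along the cylinder and store each sample as a groove depth."""
    groove = []
    for i in range(steps):
        pressure = wave(i / steps)  # sound pressure, expected in [-1, 1]
        level = round((pressure + 1) / 2 * (DEPTH_LEVELS - 1))
        groove.append(level)
    return groove

def play_back(groove: list) -> list:
    """Trace the groove and turn each depth back into a pressure value."""
    return [level / (DEPTH_LEVELS - 1) * 2 - 1 for level in groove]

def tone(t: float) -> float:
    """Hypothetical test sound: three cycles of a sine wave over one cylinder turn."""
    return math.sin(2 * math.pi * 3 * t)

steps = 200
groove = record(tone, steps)
sound = play_back(groove)

# The replayed sound differs from the original only by the coarseness of the groove.
worst_error = max(abs(tone(i / steps) - s) for i, s in enumerate(sound))
print("largest playback error:", round(worst_error, 3))
```

The small residual error plays the role of the scratchy imperfection of tin foil: the recording is faithful only up to the resolution of the medium.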

This was groundbreaking, not merely for its novelty but for its potential. Before the phonograph, music was as fleeting as the breeze; once played, a melody vanished into memory. Now, sound could be captured, stored, and relived at will. The ramifications were vast—from entertainment to education, and beyond.

Spinning Forward: The Legacy of Edison’s Phonograph

The phonograph spun a future where music became a mainstay in homes, creating the first record companies and seeding an industry that would evolve into today’s global music behemoth. It was the phonograph that first made the private consumption of music possible, predating playlists and podcasts by more than a century.

Moreover, the phonograph paved the way for future audio technologies—everything from the radio to digital streaming owes a nod to Edison’s tin-foiled experiment. It transformed the music industry, yes, but it also touched every sphere where sound held sway. Imagine audiobooks, voice mails, and multimedia—the seeds for all these modern conveniences were sown by Edison’s phonograph.

Reflecting on Sound and Silicon

Today, as we casually summon songs from sleek devices that fit in our pockets, it’s almost comical to think of Edison’s bulky, foil-wrapped cylinders. Yet, there’s a certain charm in the scratchy, imperfect sound of those first recordings—a reminder of the time when technology was as much about wonder as utility.

In retrospect, Edison’s phonograph wasn’t just an invention; it was a proclamation that sound could be more than an ephemeral pleasure—it could be a tangible, tradable commodity. It set the stage for a world where sounds are as savable, shareable, and ubiquitous as the air we breathe. Indeed, from a world silenced by the limitations of memory and distance, Edison offered us a ticket to an audible eternity.

This nostalgia for sound captured, the marvel of a voice from the past reaching through time to speak to us, remains one of the phonograph’s most enduring legacies. In the grand narrative of technological progress, the phonograph stands as a humble giant, its echoes still heard, however faintly, in every corner of our sound-saturated lives.

References:

(1) Edison's Invention of the Phonograph

(2) History of the Cylinder Phonograph

#ArtificialIntelligence #Medicine #Surgery #Medmultilingua

Sesame Street: A Look Back at Its Revolutionary Premiere on November 10, 1969

By Dr. Marco Vinicio Benavides Sánchez

On November 10, 1969, a revolutionary new show hit the airwaves, changing the landscape of children’s television forever. Sesame Street, conceived by the visionary minds of Joan Ganz Cooney and Lloyd Morrisett, premiered on this day, introducing a colorful community of humans and Muppets that would become household names worldwide. As we celebrate over five decades of this iconic show, let’s take a nostalgic look back at its origins, impact, and enduring legacy.

A Bold Beginning

In the late 1960s, the concept of educational television for children was a novel idea. Joan Ganz Cooney and Lloyd Morrisett saw an opportunity to harness television's vast potential as a teaching tool, aiming to bridge the educational gaps in preschool-aged children, especially those from low-income families. Produced by the then-named Children's Television Workshop, now Sesame Workshop, Sesame Street broke the mold by combining education with entertainment in unprecedented ways.

The Sesame Formula: Education Through Entertainment

Sesame Street's format was groundbreaking. It cleverly mixed live-action, puppetry, and animation, crafted to engage children while teaching them critical pre-academic skills. The show introduced characters like Big Bird, the 8-foot-tall canary; Elmo, the little red monster; and Cookie Monster, whose voracious appetite for cookies is matched by his love for learning letters. These Muppets, created by the legendary Jim Henson, were not just entertaining; they were also vehicles for education, addressing literacy, numeracy, and social skills.

Pioneering Diversity and Inclusion

From its inception, Sesame Street was a pioneer in diversity and inclusion. Its urban setting, modeled after a New York City neighborhood, and its multicultural cast, both human and Muppet, reflected the real-life diversity of its audience. This was part of its core mission: to represent all children, from all backgrounds, learning and playing together.

Tackling Social Issues

Beyond academic lessons, Sesame Street never shied away from tackling complex social issues in a way that children could understand. From the very beginning, it addressed everything from disability, race, and poverty, to more recent issues such as parental incarceration and autism, teaching children empathy and understanding.

Global Reach and Adaptations

Sesame Street’s influence extends far beyond the United States. Broadcast in more than 150 countries, with local co-productions such as “Plaza Sésamo” in Latin America and “Sesamstraße” in Germany, the show carries its core themes of kindness, respect, and learning across cultural and linguistic barriers, making it a global phenomenon.

Legacy and Impact

The impact of Sesame Street is immeasurable. It has won numerous awards, including multiple Emmys and a Peabody Award. Educational research has consistently shown that Sesame Street has made significant contributions to the educational achievements of countless children around the world. It has also set the standard for how media can be used as a force for good in education.

A New Era

Even after more than fifty years, Sesame Street continues to innovate and adapt. The digital age has brought new challenges and opportunities, and Sesame Street has evolved, embracing new platforms and technologies to reach a new generation of viewers. Now available on HBO and PBS Kids, it continues to expand its educational outreach, ensuring that it remains relevant and accessible to all.

Celebrating Sesame Street

As we celebrate the anniversary of this pioneering show, we not only remember its past but also look forward to its future. Sesame Street remains a beloved jewel in the crown of children’s television, continuing to educate, inspire, and delight children and adults alike. It is a testament to the vision of its creators and the enduring appeal of its characters that today, just as in 1969, Sesame Street is a place where every child can feel valued and loved.

In a world where learning and play go hand in hand, Sesame Street continues to teach us all the most important lesson: how to be better human beings. Here’s to many more years of learning, laughter, and Sesame Street.

To read more:

(1) Sesame Street, newly revamped for HBO, aims for toddlers of the Internet age

(2) PBS KIDS to Add New Half-hour SESAME STREET Program on Air and on Digital Platforms This Fall

#ArtificialIntelligence #Medicine #Surgery #Medmultilingua

55 Years of the Internet: The Challenges and the Possibilities

By Dr. Marco Vinicio Benavides Sánchez

On October 29, 1969, at the University of California, Los Angeles (UCLA), a team led by Professor Leonard Kleinrock achieved a technological milestone by sending the first message over ARPANET, the precursor to what we know today as the Internet.

This innovation, initially conceived by the U.S. Department of Defense during the tension of the Cold War, aimed to create a robust communications network capable of surviving a nuclear attack.

This network not only survived but also evolved, transforming into the global infrastructure that today connects billions of devices and redefines entire sectors, significantly including medicine.

Technological Evolution and Digital Expansion

Since the 1960s, the development of technologies such as time-sharing and packet switching optimized the use and efficiency of computing resources, catalyzing an era of unprecedented digital expansion.

The arrival of the World Wide Web in 1991, created by Tim Berners-Lee, further democratized access to information and revolutionized social and business interactions globally. This evolution has allowed businesses to expand their reach beyond physical borders and people to explore new forms of communication and collaboration.

Key Elements of the World Wide Web:

- HTTP (Hypertext Transfer Protocol): This protocol governs how web pages are requested and transferred between servers and browsers.

- HTML (Hypertext Markup Language): This is the standard language used to create and design pages on the web.

- URLs (Uniform Resource Locators): These are the addresses that allow access to various resources on the web, acting as bridges to information.

Impact of the Internet on Medicine

The integration of the Internet has transformed medicine in profound ways. Telemedicine has removed numerous geographic barriers, allowing doctors and specialists to treat patients in remote locations with the same diligence as if they were physically present. In addition, access to large medical databases has accelerated clinical research, facilitating discoveries in treatments and diagnoses that would have previously taken decades.

Current Challenges and Artificial Intelligence

With the advent of Artificial Intelligence, medicine is on the threshold of a revolution. Computationally and energy-intensive AI models are reshaping everything from diagnosis to healthcare management. However, the integration of this technology also faces significant challenges, such as the need to ethically handle huge volumes of sensitive patient data, which raises serious questions about privacy and consent.

The Internet and the Future

Organizations such as ICANN and the IETF play crucial roles in the administration and proper functioning of the Internet. While ICANN ensures the uniqueness of names and numbers on the network, the IETF develops and maintains the standards and protocols that enable the Internet to function harmoniously globally.

Looking ahead, it is imperative that research and development continue, not only to overcome the technical and ethical challenges presented by AI, but also to ensure that advances in medicine and other areas are accessible to all, contributing to a more equitable and healthy future.

Conclusion

On this 55th anniversary of the Internet, we recognize a trajectory marked by both impressive achievements and significant challenges. The Internet has revolutionized the way we live, work, and interact, offering almost limitless possibilities for improving global health and human well-being.

As advances are made, it is crucial that we all ensure that technological innovations are developed responsibly and ethically. The history of the Internet is a testament to human innovation, showing enormous potential to overcome challenges and transform them into opportunities for collective advancement.

This anniversary not only celebrates a milestone, but also reminds us that we continue to explore the depths of what is possible when curious and creative minds connect across this vast network of networks.

To learn more:

(1) The Original HTTP as defined in 1991

(2) Digital Governance: An Assessment of Performance and Best Practices

(3) INTERNET PROTOCOL. DARPA INTERNET PROGRAM. PROTOCOL SPECIFICATION

(4) The beginnings of the Internet

(5) Introduction to links and anchors

(6) The Difference Between The Internet and World Wide Web

(7) The size and growth rate of the Internet

(8) Brief History of the Internet

#ArtificialIntelligence #Medicine #Surgery #Medmultilingua

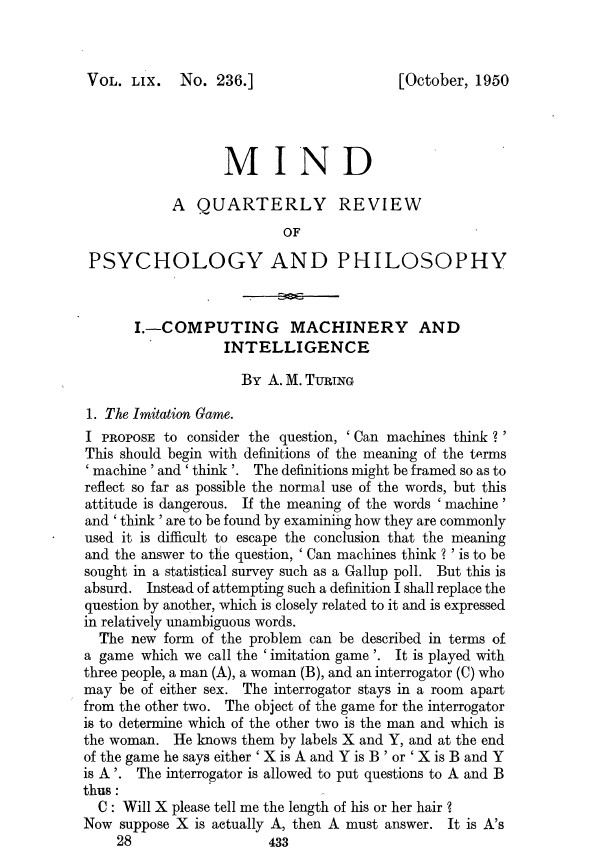

Alan Turing's Pioneering Influence on Artificial Intelligence and Its Impact on Modern Medicine

By Dr. Marco Vinicio Benavides Sánchez

In October 1950, the journal Mind published an article that would change the course of technology and whose reverberations would be felt in countless fields, including medicine. This article, "Computing Machinery and Intelligence," written by Alan Turing, not only introduced what we now know as the Turing Test, but also laid the philosophical and technical foundations for what would eventually become known as artificial intelligence (AI).

The Imitation Game

Turing begins his exploration with a simple but deeply provocative proposition: the imitation game. This game, which would later be renamed the Turing Test, involves a human interrogator who must determine, through questions and answers, which of two participants is human and which is a machine. The underlying premise is that if a machine can imitate human intelligence to the point of being indistinguishable, then it could be said to “think.”

Can Machines Think?

Turing’s article does not stop at proposing the game; it unfolds a broader discussion about whether machines can think. Turing sidesteps the trap of defining thought and instead reframes the question as whether machines can do well at the imitation game. If a machine can lead a human interrogator to mistake it for a human, then under this operational definition, the machine is thinking.
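To make the operational definition concrete, here is a toy sketch of the game in Python. It is only an illustration under stated assumptions: the participants, the interrogator's rule, and names such as `play_round` and `detection_rate` are hypothetical stand-ins invented for this example, not anything taken from Turing's paper. The idea it shows is that a machine "does well" when the interrogator identifies it no more often than chance would allow.

```python
import random


def play_round(human, machine, interrogator, question, rng):
    """Run one round and report whether the interrogator spotted the machine."""
    # Hide the two participants behind the neutral labels "A" and "B",
    # deciding at random who gets which label.
    players = [human, machine]
    rng.shuffle(players)
    seats = {"A": players[0], "B": players[1]}
    # Both participants answer the same question in text form.
    answers = {label: player(question) for label, player in seats.items()}
    # The interrogator returns the label it believes belongs to the machine.
    guess = interrogator(question, answers)
    return seats[guess] is machine


def detection_rate(human, machine, interrogator, questions, seed=0):
    """Fraction of rounds in which the machine was correctly identified."""
    rng = random.Random(seed)
    hits = sum(play_round(human, machine, interrogator, q, rng) for q in questions)
    return hits / len(questions)


# Hypothetical stand-ins, chosen only to make the sketch runnable.
def human(question):
    return "Let me think about that for a moment."


def clumsy_machine(question):
    return "QUERY NOT UNDERSTOOD"


def fluent_machine(question):
    return "Let me think about that for a moment."


def interrogator(question, answers):
    # Naive rule: an all-caps answer gives the machine away; otherwise guess "A".
    for label, text in answers.items():
        if text.isupper():
            return label
    return "A"


questions = [f"Question {i}" for i in range(200)]
print(detection_rate(human, clumsy_machine, interrogator, questions))
print(detection_rate(human, fluent_machine, interrogator, questions))
```

In this sketch the clumsy machine is caught in every round, while the fluent one is caught only about half the time, which is what blind guessing would achieve. A detection rate close to one half is the sense in which a machine performs well at the game.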

Digital Computers and Their Universality

Another revolutionary concept that Turing introduces is the universality of digital computers. He explains how these machines, if properly programmed, have the potential to perform any calculation that can be described algorithmically. This concept of universality is what today enables computers to do everything from running smartphones to managing life support systems in hospitals.

Learning Machines

Perhaps one of the most forward-thinking points for his time was Turing's discussion of learning machines. He proposed that, with tweaks to their programming, machines could eventually improve their performance based on past experiences, an idea that foreshadows what we now call machine learning algorithms and neural networks.

Objections and Responses

Turing didn't just propose ideas; he also anticipated and refuted objections to the idea of thinking machines, from theological to philosophical to mathematical arguments. His meticulous defense of the potential ability of machines to display intelligent behavior remains a testament to his forward-thinking vision.

Citation: Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59, 433–460.

Impact and Legacy

Turing's legacy is immense and extends far beyond theory. In modern medicine, his ideas have paved the way for developments in artificial intelligence that are now reshaping everything from medical diagnostics to robotic surgery. The ability of machines to learn from large data sets can be seen in applications ranging from disease prediction to personalizing treatments for patients.

Furthermore, the Turing Test remains a fundamental reference point in evaluating artificial intelligence, challenging and motivating generations of scientists and technicians to think about how machines interact with and mimic human capabilities.

Turing’s vision, therefore, not only shaped the field of artificial intelligence, but also helped shape the future of medicine—a field where precision and efficiency can directly translate into saved and improved lives. So, while we celebrate Turing’s contributions, we also recognize the vast landscape of possibilities his work continues to unlock in medicine and beyond.

This article is a tribute to Alan Turing's lasting legacy and a reflection on his impact on modern medicine, demonstrating how a pioneering idea can cross decades and disciplines, changing the world in unpredictable and wonderful ways.

For further reading:

(1) Computing Machinery and Intelligence - University of Maryland ....

(2) Computing Machinery and Intelligence (Alan Turing).

(3) Computing machinery and intelligence. - APA PsycNet.

(4) Alan Turing’s “Computing Machinery and Intelligence” - Springer.

#Emedmultilingua #Tecnomednews #Medmultilingua

Masamitsu Yoshioka in 1941 with a Japanese bomber plane. Photo Credit: Yoshioka family photo.

Masamitsu Yoshioka, the Last Pearl Harbor Bombardier, Dies at 106

Dr. Marco V. Benavides Sánchez - October 5, 2024

Masamitsu Yoshioka, the final surviving crew member of the approximately 770 men who made up the Japanese air fleet that attacked Pearl Harbor on December 7, 1941, passed away at the remarkable age of 106. His death marks the end of an era, one that recalls the fateful event which brought the United States into World War II. Yoshioka, who was just 23 years old when he participated in the attack, rarely spoke publicly about his role in one of the most infamous moments of modern history, which had far-reaching consequences for Japan, the United States, and the world at large.

The news of Yoshioka’s death was shared on August 28 by Takashi Hayasaki, a Japanese journalist and author, who had met with Yoshioka in the past year. Hayasaki posted on social media, expressing the deep and thought-provoking nature of their conversation: “When I met him last year, he spoke many valuable words with a dignified presence. Have Japanese people forgotten something important since the end of the war? What is war? What is peace? What is life? Rest in peace.”

Yoshioka, who lived in the Adachi ward of Tokyo, had spent nearly eight decades reflecting on his participation in the attack. He often visited the Yasukuni Shrine to pray for the souls of his fallen comrades, including the 64 Japanese soldiers who died in the Pearl Harbor attack. Japan's losses during the operation included 29 aircraft and five submarines. Despite these moments of reflection, Yoshioka avoided the spotlight, remaining largely silent about the brief but monumental 15 minutes over Pearl Harbor on that fateful day.

Pearl Harbor and Its Impact

The attack on Pearl Harbor remains etched in history, not just because it triggered the United States' entry into World War II, but because of its sheer audacity. On the morning of December 7, 1941, Japanese aircraft descended upon the American naval base in Hawaii, unleashing a coordinated and devastating assault. It was this event that President Franklin D. Roosevelt would famously call "a date which will live in infamy."

Yoshioka was a bombardier on one of the planes involved in the attack. His mission was to drop a torpedo, but a twist of fate saw it strike the unarmed battleship U.S.S. Utah. The ship had been designated as a target to avoid because it had been demilitarized in 1931 under the London Naval Treaty. Nevertheless, 58 crew members aboard the Utah were killed when the torpedo struck. For Yoshioka, the Utah was an accidental target, one that he would reflect on later in life with deep regret.

In a 2023 interview for Japan Forward with Jason Morgan, an associate professor at Reitaku University, Yoshioka confessed: “I’m ashamed that I’m the only one who survived and lived such a long life.”

Japanese attack on Pearl Harbor, 1941. Photo Credit: Unbekannt/Library of Congress/Wikimedia Commons CC0 1.0

Reflecting on the Past

Yoshioka carried the weight of his survival throughout his long life. He often pondered the lives of the men who perished on both sides of the conflict, acknowledging the shared humanity of soldiers who were, at the time, simply doing their duty. In the same interview, when asked if he had ever considered visiting Pearl Harbor, Yoshioka initially responded that he "wouldn’t know what to say." However, he eventually admitted that he would like to visit the graves of the men who died in the attack, and “pay them [his] deepest respect.”

Throughout the war, Yoshioka continued to serve, though luck often seemed to be on his side. After surviving the Pearl Harbor attack, he returned to the aircraft carrier Soryu. In June 1942, however, when the Soryu was sunk in the Battle of Midway, Yoshioka was on leave. His fate led him to other critical moments of the war. In 1944, he was stationed in the Palau Islands but was recuperating from malaria in the Philippines during the brutal Battle of Peleliu. His luck extended further when his plane was grounded due to a shortage of spare parts just as Japan began ordering kamikaze attacks on Allied ships in the Pacific.

Yoshioka also participated in the attack on Wake Island just days after Pearl Harbor, on December 11, 1941, and he was involved in a raid in the Indian Ocean in early 1942. According to Professor Morgan, Yoshioka took part in numerous campaigns that were, as he described, efforts “for the liberation of Asia from white colonialism.” When Emperor Hirohito announced Japan’s surrender in August 1945, Yoshioka was stationed at an airbase in Japan.

Masamitsu Yoshioka in 2023, when he was 105. Photo Credit: Jason Morgan/Japan Forward

Life After the War

After Japan’s defeat, Yoshioka returned to civilian life. He worked for the Japan Maritime Self-Defense Force, which was established as a replacement for the Imperial Japanese Navy. Later, he worked for a transport company. His post-war years were largely quiet, as he refrained from speaking openly about his wartime experiences.

Born on January 5, 1918, in Ishikawa Prefecture in western Japan, Yoshioka had joined the Imperial Japanese Navy at the age of 18. His early years in the Navy were spent working on ground crews, maintaining biplanes and other aircraft. It wasn’t until 1938 that he began training as a navigator, and a year later, he was posted to the Soryu, which was deployed to fight against the Nationalist Chinese forces.

In August 1941, Yoshioka and his air crew were assigned to torpedo training. Due to a shortage of actual torpedoes, they practiced using dummy wooden canisters filled with water, with only one real armor-piercing projectile available. When the Soryu set sail from the Kuril Islands on November 26, 1941, the destination was kept secret from the crew. The only instruction they received was to pack shorts, an indication that they were heading to warmer climates.

Yoshioka recalled feeling honored to have been selected for such a critical mission but admitted to hoping he would survive to return home. Openly expressing such a sentiment during that time, however, could have been seen as subversive, as military personnel were often issued pistols for the purpose of suicide to avoid capture.

When Yoshioka and his crew were finally informed that their target was Pearl Harbor, many were stunned. Yoshioka remembered the moment clearly: “When I heard that, the blood rushed out of my head. I knew that this meant a gigantic war, and that Hawaii would be the place where I would die.”

The 110-minute flight to Pearl Harbor was tense, and Yoshioka described the moment of dropping his torpedo as surreal. He recalled seeing the explosion of seawater as the torpedo hit the Utah. As the plane flew over the ship’s deck, Yoshioka realized they had hit a demilitarized training ship, which had been unarmed for a decade.

Reflecting on the attack in his later years, Yoshioka spoke with regret and sorrow, not just for the men who died on the ships they targeted, but also for the men on his own side who were lost during the war. "They were young men, just like we were," he said.

For further reading:

(1) Masamitsu Yoshioka, Last Surviving Pearl Harbor Bombardier, Dead at 106.

(2) Masamitsu Yoshioka, last of Japan’s Pearl Harbor attack force, dies at 106.

(3) Masamitsu Yoshioka, airman thought to be last survivor of Japanese ....

#Emedmultilingua #Tecnomednews #Medmultilingua

Sir Alexander Fleming (6 August 1881 – 11 March 1955)

The Sequencing of Alexander Fleming’s Original Penicillin-Producing Mold: A Journey into Antibiotic History and its Modern Implications

Dr. Marco V. Benavides Sánchez - October 2, 2024

The discovery of penicillin by Alexander Fleming in 1928 remains one of the most significant milestones in medical history. This antibiotic, derived from the Penicillium mold, revolutionized healthcare by offering a powerful tool to combat bacterial infections, saving millions of lives. Nearly a century later, a collaborative effort by researchers from Imperial College London, CABI (Centre for Agriculture and Bioscience International), and the University of Oxford has taken this historical achievement a step further. For the first time, they have successfully sequenced the genome of the original Penicillium mold that Fleming used to develop the world’s first antibiotic.

This remarkable achievement not only pays tribute to Fleming's groundbreaking discovery but also has important implications for the future of antibiotic production. In this article, we will explore the historical context of penicillin, the scientific process of genome sequencing, and how this new research can influence modern medicine and industry.

The Historical Significance of Fleming's Discovery

The story of penicillin begins in 1928 when Alexander Fleming, a Scottish bacteriologist, was working at St. Mary’s Hospital Medical School in London. During one of his experiments involving bacteria, Fleming noticed something unusual: a petri dish that had accidentally been exposed to air had developed a blue-green mold. Around the mold, the bacteria had stopped growing. Intrigued, Fleming identified the mold as belonging to the genus Penicillium. His experiments soon revealed that the mold produced a substance capable of killing a wide range of bacteria.

This substance, named penicillin, was the first true antibiotic. Prior to this discovery, bacterial infections like pneumonia, meningitis, and sepsis were often fatal. Antibiotics, including penicillin, have since revolutionized medical treatment, leading to the development of many other life-saving drugs.

Fleming’s accidental discovery in 1928 had a profound and lasting impact on medicine. However, the strain of Penicillium that Fleming used to produce the first samples of penicillin remained frozen and largely untouched for over fifty years. It wasn’t until recently that a team of scientists decided to unlock the genetic secrets of this mold through genome sequencing.

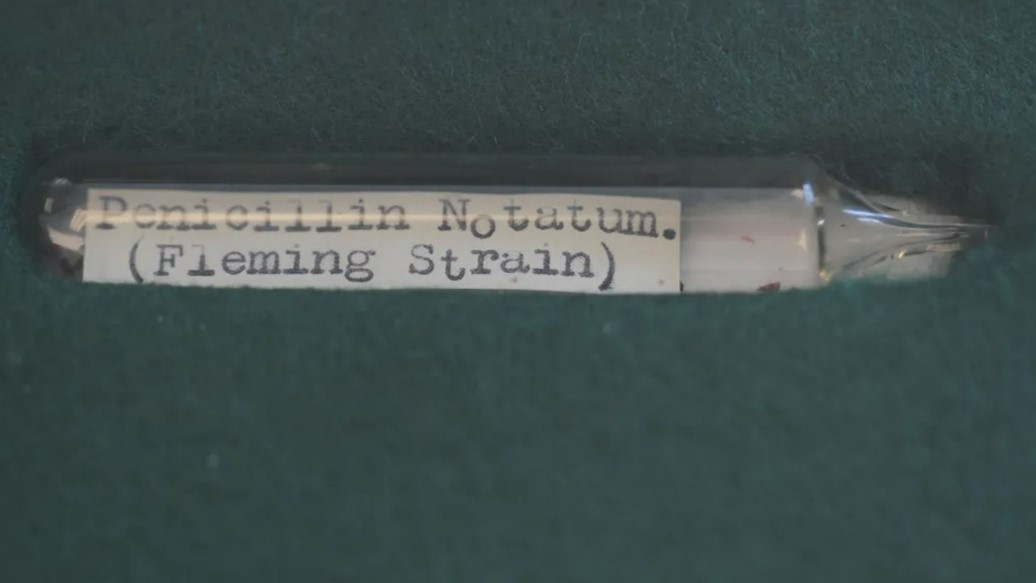

The original Penicillium strain used by Alexander Fleming is stored at CABI’s culture collection in Egham, England. Credit: CABI.

The Genome Sequencing of Fleming’s Original Penicillium Strain

The genome sequencing of Alexander Fleming’s original Penicillium strain represents a major scientific achievement. Using samples that had been carefully preserved and frozen for decades, researchers were able to extract DNA from the mold and sequence its genome for the very first time. Genome sequencing is a complex process that involves determining the exact order of the nucleotides in an organism's DNA, effectively creating a "blueprint" of its genetic makeup.

For the team of researchers, this project had both historical and scientific value. On the one hand, sequencing the genome of the original Penicillium strain used by Fleming provided a direct link to one of the greatest medical discoveries of the 20th century. On the other hand, the project allowed for a deeper understanding of how Penicillium molds produce penicillin at a molecular level.

Once the genome of Fleming’s Penicillium strain was successfully sequenced, the research team compared it to the genomes of two industrial strains of Penicillium from the United States. These industrial strains are used in modern factories to produce large quantities of penicillin, which is still widely used as an antibiotic today.

Comparative Analysis: Fleming’s Mold vs. Modern Industrial Strains

The comparative analysis between Fleming’s original mold and the modern industrial strains revealed some fascinating insights. While both types of Penicillium were capable of producing penicillin, the genetic differences between them highlighted how the process of antibiotic production has evolved over time.

One of the most significant findings from the study concerned the regulatory genes of Fleming’s strain and the industrial strains. Regulatory genes play a crucial role in controlling the production of enzymes and other proteins within an organism. Researchers found that the industrial strains from the US contained more copies of the regulatory genes responsible for penicillin production than Fleming’s original strain. This helps explain why the industrial strains are able to produce larger quantities of penicillin, making them more efficient for mass production.

However, despite these differences, the core genes responsible for producing penicillin remained similar between Fleming’s mold and the modern strains. This suggests that the basic mechanism by which Penicillium molds produce penicillin has remained largely unchanged since Fleming’s time, even though industrial strains have been optimized for higher output.

Differences in Enzyme Production: A Closer Look

Another key discovery from the genome sequencing project was the variation in the genes that code for penicillin-producing enzymes. While the regulatory genes were similar in sequence between the UK and US strains of Penicillium and differed mainly in their number of copies, the genes for the enzymes involved in the actual production of penicillin showed differences. These variations likely arose as a result of natural evolution.

The research team speculated that Penicillium molds in the UK and the US evolved slightly differently due to environmental factors. These subtle genetic changes led to the production of slightly different versions of the enzymes that create penicillin. Although both versions are effective at producing the antibiotic, understanding these differences could open up new avenues for improving penicillin production.

Industrial Implications: Toward More Efficient Antibiotic Production

The sequencing of Fleming’s original Penicillium mold has important implications for the pharmaceutical industry, particularly in the field of antibiotic production. One of the primary goals of the research was to identify ways in which modern penicillin production could be made more efficient.

By understanding the genetic differences between Fleming’s strain and the industrial strains, scientists can potentially develop new methods for optimizing antibiotic production. For example, the discovery that US strains have more copies of certain regulatory genes suggests that increasing the number of these genes in other strains could lead to higher penicillin output.

Furthermore, the differences in enzyme production between the UK and US strains could provide valuable information for creating more effective antibiotics. Although penicillin remains a powerful antibiotic, the rise of antibiotic-resistant bacteria poses a significant challenge to global health. Researchers are constantly searching for new antibiotics or ways to improve existing ones. The genome sequencing of Fleming’s mold may offer insights that lead to the development of more potent or efficient antibiotics in the future.

Fleming’s sample in a tube. Credit: CABI.

Honoring Fleming’s Legacy and Looking to the Future

The successful sequencing of Alexander Fleming’s original Penicillium mold is more than just a scientific achievement; it is a tribute to one of the most important medical discoveries in history. Fleming’s discovery of penicillin paved the way for the modern era of antibiotics, transforming healthcare and saving countless lives, turning him into a true benefactor of our species.

As antibiotic resistance continues to threaten global health, the need for new antibiotics and improved production methods has never been more urgent. The insights gained from the genome sequencing of Fleming’s mold could play a crucial role in addressing these challenges. By understanding the genetic mechanisms behind penicillin production, scientists may be able to develop more efficient ways of producing antibiotics, ensuring that we have the tools needed to combat bacterial infections for generations to come.

In conclusion, the genome sequencing of the original Penicillium mold used by Alexander Fleming is a remarkable achievement that bridges the past and the future. It allows us to revisit one of the most important discoveries in medical history while also offering new possibilities for improving antibiotic production in the present day. As researchers continue to explore the genetic secrets of Penicillium, we can look forward to a future where antibiotics will remain a powerful weapon in the fight against bacterial infections.

For further reading:

(1) Genome of Alexander Fleming's original penicillin-producing mold ....

(2) Comparative genomics of Alexander Fleming’s original Penicillium ....

(3) Genome of Fleming’s original penicillin-producing mould ... - CABI.org.

(4) Genome of Alexander Fleming’s Original Penicillin Mold Sequenced for ....

(5) Genome of Alexander Fleming’s original penicillin-producing mould ....

#Emedmultilingua #Tecnomednews #Medmultilingua

The Traditional Mexican Diet: A Healthy and Environmentally Sustainable Culinary Legacy

Dr. Marco V. Benavides Sánchez - September 21, 2024

Mexico is famous for its rich culture, vibrant traditions, and flavorful cuisine. While modern Mexican food is often associated with indulgent dishes like tacos, burritos, and quesadillas, the traditional Mexican diet is built on a foundation of ancient ingredients and methods that are not only delicious but also highly nutritious. For centuries, corn, beans, squash, and chilies have formed the cornerstone of Mexican cuisine, providing essential nutrients while celebrating the region’s biodiversity.

Recent research has highlighted the numerous health benefits of the traditional Mexican diet, from improved heart health to reduced risks of chronic diseases. Beyond personal health, the diet also offers ecological advantages due to the sustainability of its ingredients. In this article, we will explore the staple foods of traditional Mexican cuisine, their health benefits, the environmental implications of these foods, and the growing challenge of balancing traditional and modern eating habits in Mexico.

1. Staple Foods in Mexican Cuisine

Traditional Mexican cuisine revolves around a core set of ingredients, many of which have been cultivated in Mexico for thousands of years. These foods are not only the essence of Mexican dishes but also provide essential nutrients that promote health.

1.1 Corn (Maíz)

Corn is a foundational food in Mexico, domesticated over 9,000 years ago. It is a versatile grain used to make tortillas, tamales, and other staple dishes. Corn provides carbohydrates for energy and dietary fiber, promoting digestion and blood sugar regulation. When combined with beans, corn forms a complete protein, delivering essential amino acids needed for healthy body function.

1.2 Beans (Frijoles)

Beans are another dietary staple in Mexican cuisine. Common varieties include black beans, pinto beans, and kidney beans, all of which are rich in plant-based protein. Beans are high in fiber, promote digestive health, and provide important vitamins and minerals such as folate, iron, and magnesium. Paired with corn, they create a nutritionally balanced meal with all essential amino acids.

1.3 Peppers (Chiles)

Chiles, or peppers, are used both for flavor and health benefits in Mexican cuisine. From jalapeños to habaneros, chiles are packed with antioxidants and vitamin C. The capsaicin in chiles is responsible for their spicy heat and is known for its anti-inflammatory and metabolism-boosting properties.

1.4 Tomatoes (Tomates)

Tomatoes are indispensable in Mexican cooking, appearing in everything from salsa to stews. They are rich in vitamins A and C and contain lycopene, a potent antioxidant associated with reduced risks of heart disease and certain cancers. Tomatoes add not only flavor but also essential nutrients to a wide variety of dishes.

1.5 Squash (Calabaza)

Squash, such as zucchini and pumpkin, is another staple in Mexican cuisine. It is a rich source of vitamins A and C, fiber, and antioxidants that support immune function, digestive health, and skin vitality.

1.6 Other Vegetables

Mexican dishes frequently feature a wide array of vegetables such as onions, avocados, radishes, cabbage, and chayote. These ingredients not only add layers of flavor but also contribute to a balanced diet with important vitamins, minerals, and antioxidants. Avocados, in particular, are rich in heart-healthy fats and fiber.

1.7 Herbs and Spices

Herbs and spices like oregano, epazote, cumin, and cinnamon enhance Mexican cuisine with complex flavors. These seasonings may also provide health benefits, such as oregano’s antibacterial properties and cinnamon’s potential to help regulate blood sugar levels.

1.8 Fresh Fruits

Fresh fruits such as papaya, mango, pineapple, and guava are common in Mexican cuisine, offering natural sweetness and a wealth of vitamins, particularly vitamin C, along with fiber and antioxidants.

2. Health Benefits of the Traditional Mexican Diet

The traditional Mexican diet is not only flavorful but also remarkably nutritious, offering numerous health benefits.

2.1 Nutrient-Rich Composition

The pairing of corn and beans is one of the most balanced nutritional combinations in the world. Corn provides complex carbohydrates for sustained energy, while beans deliver protein and essential micronutrients like folate and iron. Together, they form a complete protein, offering all the essential amino acids required for proper body function.

2.2 Anti-Inflammatory Properties

Several components of the traditional Mexican diet, such as chilies, tomatoes, and spices, possess anti-inflammatory properties. Capsaicin in peppers helps reduce inflammation, while cumin and cinnamon have been noted for their potential to reduce blood sugar levels and inflammation.

2.3 Heart Health

Corn tortillas, a staple of the Mexican diet, are lower in calories and have a lower glycemic index than refined wheat flour tortillas, making them a better choice for heart health and blood sugar management. The inclusion of healthy fats from avocados and omega-3-rich seafood in coastal regions also contributes to cardiovascular health.

2.4 Reduced Risk of Chronic Diseases

Research has shown that following a traditional Mexican diet may reduce the risk of chronic diseases like heart disease, obesity, and certain types of cancer. The diet’s emphasis on plant-based, whole foods like beans, corn, and vegetables helps improve cholesterol levels, reduce inflammation, and enhance insulin sensitivity.

2.5 Cultural and Emotional Well-Being

Beyond its nutritional value, the traditional Mexican diet holds deep cultural significance. Eating traditional foods can foster a sense of connection to heritage, family, and community, promoting overall well-being.

3. Environmental Considerations

The traditional Mexican diet is not only beneficial for personal health but also for the environment. Its focus on locally sourced, minimally processed ingredients helps reduce the environmental impact of food production.

3.1 Sustainable Ingredients

Staples such as corn, beans, and squash are often grown using sustainable agricultural practices that require fewer resources like water and fertilizers. These crops are well-adapted to Mexico’s climate and help preserve biodiversity, making their cultivation more sustainable than industrial farming.

3.2 Minimal Processing

Traditional Mexican foods are typically made from minimally processed ingredients, which lowers the energy needed for food production. For example, nixtamalization, the process of soaking and cooking corn in an alkaline solution (traditionally limewater) before it is ground into dough for tortillas, enhances the nutritional value of corn without the need for heavy industrial processing.

3.3 Diverse Agriculture

Mexico’s rich agricultural diversity supports a variety of fruits, vegetables, and herbs. This biodiversity helps maintain healthy ecosystems, reduces reliance on monoculture farming, and supports small, local farmers.

3.4 Fish and Seafood

In Mexico’s coastal regions, fish and seafood play a significant role in the diet. By supporting local fishing communities and promoting sustainable fishing practices, the traditional Mexican diet helps protect marine ecosystems and avoids the carbon footprint associated with imported seafood.

4. Challenges: Traditional vs. Modern Diets

As with many cultures worldwide, Mexico faces the challenge of balancing its traditional diet with modern eating habits, which increasingly include processed foods and fast food.

4.1 The Rise of Processed Foods

In recent decades, the popularity of processed foods, high in sugars, unhealthy fats, and refined carbohydrates, has surged in Mexico. The rise of fast food and convenience foods has contributed to increasing rates of obesity, diabetes, and other chronic diseases in the population.

4.2 Balancing Tradition and Convenience

Encouraging the consumption of traditional Mexican foods in modern society is crucial. While traditional meals are often time-intensive to prepare, efforts to adapt these dishes for modern lifestyles can help people maintain healthier eating habits. Education campaigns about the nutritional benefits of traditional foods and the cultural significance of these meals can help combat the allure of fast food and promote a return to healthier eating patterns.

Conclusion

The traditional Mexican diet is much more than a collection of delicious dishes—it is a holistic approach to food that nourishes both the body and the environment. By relying on nutrient-dense, plant-based ingredients such as corn, beans, chilies, and tomatoes, this diet offers a wealth of health benefits, from improved heart health to reduced risks of chronic diseases. Furthermore, the environmental sustainability of traditional Mexican ingredients, many of which are locally sourced and minimally processed, contributes to preserving Mexico’s rich biodiversity and supporting local farming communities.

However, as Mexico confronts the growing influence of fast food and processed foods, it is essential to strike a balance between modern convenience and traditional values. By celebrating the cultural significance of the traditional Mexican diet and promoting its health benefits, there is an opportunity to inspire a healthier future while preserving the environmental and cultural heritage of Mexican cuisine.

The next time you enjoy a warm corn tortilla, spicy salsa, or a bowl of hearty beans, you are not just savoring a meal—you are partaking in a culinary tradition that has nourished generations and continues to offer timeless health and environmental benefits.

#Tecnomednews #Emedmultilingua #Medmultilingua

The Viking 2 Mission: A Historic Journey to the Red Planet

Dr. Marco V. Benavides Sánchez - September 3, 2024

On September 3, 1976, the Viking 2 lander touched down on the vast plains of Utopia Planitia on Mars, marking a pivotal moment in space exploration. This mission, part of NASA's ambitious Viking program, was designed to explore the Martian surface and atmosphere, search for signs of life, and pave the way for future missions to our enigmatic planetary neighbor. Viking 2's success was built on the foundations laid by its predecessor, Viking 1, but it also blazed new trails in our understanding of Mars. This chronicle delves into the journey, challenges, and scientific achievements of the Viking 2 mission, a project that remains a landmark in space exploration history.

The Genesis of the Viking Program

The Viking program was conceived during the 1960s, a decade marked by rapid advancements in space technology and exploration. NASA, buoyed by the success of the Apollo missions to the Moon, turned its attention toward Mars, the fourth planet from the Sun and the most Earth-like in our solar system. The idea of sending a spacecraft to Mars to search for signs of life captivated both scientists and the public. The Viking program, consisting of two identical spacecraft, Viking 1 and Viking 2, was born out of this vision.

The Viking program's primary objectives were clear: to obtain high-resolution images of Mars' surface, characterize its atmosphere and weather patterns, analyze its soil, and search for any possible signs of life. Each spacecraft was equipped with an orbiter and a lander, both carrying a suite of scientific instruments designed to achieve these goals. After the successful launch and subsequent landing of Viking 1 on July 20, 1976, all eyes turned to Viking 2, the program's second attempt at unraveling the mysteries of the Red Planet.

Launch and Journey to Mars

Viking 2 was launched on September 9, 1975, from Cape Canaveral Air Force Station in Florida. The spacecraft was propelled by a Titan IIIE-Centaur rocket, a powerful vehicle capable of escaping Earth's gravity and embarking on the long journey to Mars. The launch was a complex and delicate operation, requiring precise calculations to ensure that the spacecraft would reach Mars at the right time and place. The journey to Mars spanned nearly a year, covering a distance of over 400 million kilometers.

The spacecraft carried both an orbiter and a lander. The orbiter was designed to enter orbit around Mars, while the lander would descend to the surface. During the voyage, the spacecraft's systems were carefully monitored and adjusted by NASA's team of scientists and engineers. The journey was not without its challenges. Spacecraft traveling such vast distances must navigate the complex gravitational fields of the Sun and other planets, avoid collisions with micrometeoroids, and maintain the integrity of their systems in the harsh environment of space. Despite these challenges, Viking 2's journey was remarkably smooth, a testament to the engineering prowess of its creators.

Arrival and Orbital Insertion

After nearly a year in space, Viking 2 arrived at Mars on August 7, 1976. The orbiter's engines fired to slow the spacecraft down, allowing it to be captured by Mars' gravity and enter a stable orbit. This maneuver, known as orbital insertion, was a critical step in the mission. The orbiter needed to be in the correct orbit to relay data from the lander back to Earth and to conduct its own scientific observations of the Martian surface and atmosphere.
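
For readers who want to check the "nearly a year" figure, the mission timeline can be worked out with simple date arithmetic. The short Python sketch below uses only the three dates given in this article (launch, orbital insertion, and landing); the variable and function names are illustrative choices, not part of any NASA tooling.

```python
from datetime import date

# Dates as stated in this article.
LAUNCH = date(1975, 9, 9)            # Viking 2 launch from Cape Canaveral
ORBIT_INSERTION = date(1976, 8, 7)   # arrival and orbital insertion at Mars
LANDING = date(1976, 9, 3)           # touchdown on Utopia Planitia


def days_between(start: date, end: date) -> int:
    """Return the number of whole days from start to end."""
    return (end - start).days


cruise_days = days_between(LAUNCH, ORBIT_INSERTION)
days_in_orbit_before_landing = days_between(ORBIT_INSERTION, LANDING)
launch_to_landing_days = days_between(LAUNCH, LANDING)

print(f"Cruise to Mars: {cruise_days} days")
print(f"In orbit before landing: {days_in_orbit_before_landing} days")
print(f"Launch to landing: {launch_to_landing_days} days")
```

By this count the cruise lasted 333 days, and the spacecraft spent a further 27 days in orbit before the lander touched down, 360 days after launch.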

Once in orbit, the Viking 2 orbiter began its mission of taking high-resolution images of the Martian surface. These images were crucial for selecting a suitable landing site for the Viking 2 lander. Scientists needed to find a location that was both scientifically interesting and safe for landing. After careful analysis, Utopia Planitia was chosen as the landing site. This large plain, located in Mars' northern hemisphere, was of great interest because of its relatively smooth surface and its proximity to the north polar ice cap, where water – a key ingredient for life – was believed to be present.

The Moment of Truth: Landing on Mars

On September 3, 1976, the Viking 2 lander separated from the orbiter and began its descent toward the Martian surface. The descent was a critical phase of the mission, fraught with danger and uncertainty. The lander had to navigate through the thin Martian atmosphere, which provided little resistance to slow it down. To ensure a safe landing, the spacecraft used a combination of aerodynamic braking, parachutes, and retrorockets. As it approached the surface, the lander's onboard computers continuously adjusted its descent trajectory, responding to the shifting winds and atmospheric conditions.